BLINK

Blink was a project for the British charity SightSavers. Its aim was to raise awareness of an eye disease called trachoma. This preventable disease gradually turns the eyelashes inward, so that they scrape against the surface of the eye and eventually cause blindness. Using digital technologies, Jason Bruges Studio wanted to recreate this effect and bring to life the reality that people living with the disease face. Our work became the main part of an exhibition about trachoma, hosted at the OXO Tower Gallery on the South Bank. The exhibition had five screens, each displaying photos donated by photographers. As a viewer stood looking at a photo, their subconscious act of blinking slowly destroyed it, in a digital representation of losing vision.

Over a 10-week period, I was the sole developer of the backend software that corroded the images in response to blinking. Each module consisted of a 50” LCD screen mounted on the exhibition wall. Below the screen was a cut-out housing the camera used to capture blinks. The cameras were Logitech BRIO 4K models. I chose them because they capture 4K images, which let us digitally crop into the face without losing quality. Rework kits are also available for this camera, which would have let us reuse the cameras if we needed them to detect infrared only. Running all of this was an iMac with a 4.00 GHz processor. The iMac was a vital piece of equipment: blinks happen in a matter of milliseconds, so it needed enough processing speed to handle the frames coming from the camera and recognise when the eye was closed. The majority of the software was the same across the screens, but certain parameters had to change depending on the image. Each iMac ran three pieces of software: a Python script, an openFrameworks application and an MQTT broker.

The Python script captured the viewer's blinks. Using OpenCV and dlib's machine learning tools, it took frames from the camera and looked for a face using a 48-landmark recognition model. In early testing I found that the model was very good at recognising multiple faces at once, which caused problems because we wanted the program to track only one person at a time. To achieve this, the program picked the person whose face covered the largest area in the frame, normally whoever was closest to the picture and camera. Once a face was found, the script zoomed into it and applied another machine learning model to detect the eyes. Once the eyes were detected, 8 landmark points were assigned to them to calculate an Eye Aspect Ratio (EAR) value. An open eye gives a higher EAR value than a closed eye. An easy way to think of this is that it measures how much of your eye is visible and returns a value. When the EAR value stays below a certain threshold for a set number of frames, the eye is counted as closed and a blink is added. To ensure that the system worked for people of all ethnicities, the threshold changed dynamically based on the EAR values received over the previous 2 seconds.
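
The blink logic described above can be sketched roughly as follows. This is a minimal illustration rather than the exhibition code: it uses the commonly used six-point form of the EAR formula (the point count in the installation may have differed), and the names `largest_face`, `eye_aspect_ratio` and `BlinkCounter`, along with the `fps`, `ratio` and `min_closed_frames` values, are assumptions chosen for the example. Landmark detection itself is left out, so the functions take plain coordinates.

```python
from collections import deque
from math import dist
from statistics import mean


def largest_face(faces):
    """Pick the face box (x, y, w, h) with the largest area, or None if empty."""
    return max(faces, key=lambda f: f[2] * f[3], default=None)


def eye_aspect_ratio(eye):
    """Six-point EAR: eye is [p1..p6], p1/p4 the corners, p2/p3 top, p6/p5 bottom."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = dist(p2, p6) + dist(p3, p5)   # two eyelid-to-eyelid distances
    horizontal = dist(p1, p4)                # corner-to-corner width
    return vertical / (2.0 * horizontal)


class BlinkCounter:
    """Counts blinks against a threshold derived from recent EAR values."""

    def __init__(self, fps=30, window_seconds=2.0, ratio=0.75, min_closed_frames=2):
        # Keep roughly the last `window_seconds` of EAR values (assumed frame rate).
        self.history = deque(maxlen=int(fps * window_seconds))
        self.ratio = ratio                      # assumed fraction of the recent mean
        self.min_closed_frames = min_closed_frames
        self.closed_frames = 0
        self.blinks = 0

    def update(self, ear):
        """Feed one EAR value per frame; returns the running blink count."""
        # The threshold follows this viewer's recent EAR values, not a fixed number.
        closed = bool(self.history) and ear < self.ratio * mean(self.history)
        self.history.append(ear)
        if closed:
            self.closed_frames += 1
        else:
            # The eye has reopened: count a blink if it stayed closed long enough.
            if self.closed_frames >= self.min_closed_frames:
                self.blinks += 1
            self.closed_frames = 0
        return self.blinks
```

Tying the threshold to each viewer's own recent EAR values, rather than a fixed constant, is what lets the same script work across different eye shapes.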

The Python script tracked the number of blinks received and sent this value to the openFrameworks application via MQTT messages. MQTT supports Quality of Service (QoS) levels, and at the higher levels delivery is acknowledged and retried, so a message reliably reaches the application it is sent to. MQTT is similar to OSC messaging, but it is designed for IoT devices.
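
As a rough sketch of what sending the blink count could look like, the example below uses the `paho-mqtt` Python client, which is an assumption; the write-up does not say which client library was used. The topic layout, the JSON payload and the function names are hypothetical, and the broker is assumed to run on the same machine.

```python
import json


def build_blink_message(screen_id, blink_count):
    """Return the (topic, payload) pair for one blink-count update."""
    topic = f"blink/screen/{screen_id}/count"      # hypothetical topic layout
    payload = json.dumps({"blinks": blink_count})  # hypothetical payload format
    return topic, payload


def publish_blink_count(screen_id, blink_count, host="localhost"):
    """Publish the current blink count to the local MQTT broker."""
    # Imported here so the helper above can be used without paho-mqtt installed.
    import paho.mqtt.publish as publish

    topic, payload = build_blink_message(screen_id, blink_count)
    # QoS 1 requests acknowledged, "at least once" delivery.
    publish.single(topic, payload, qos=1, hostname=host)
```

With QoS 1 a message may occasionally arrive twice, so sending the running total rather than a "+1" event keeps the receiver correct even if a duplicate is delivered.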

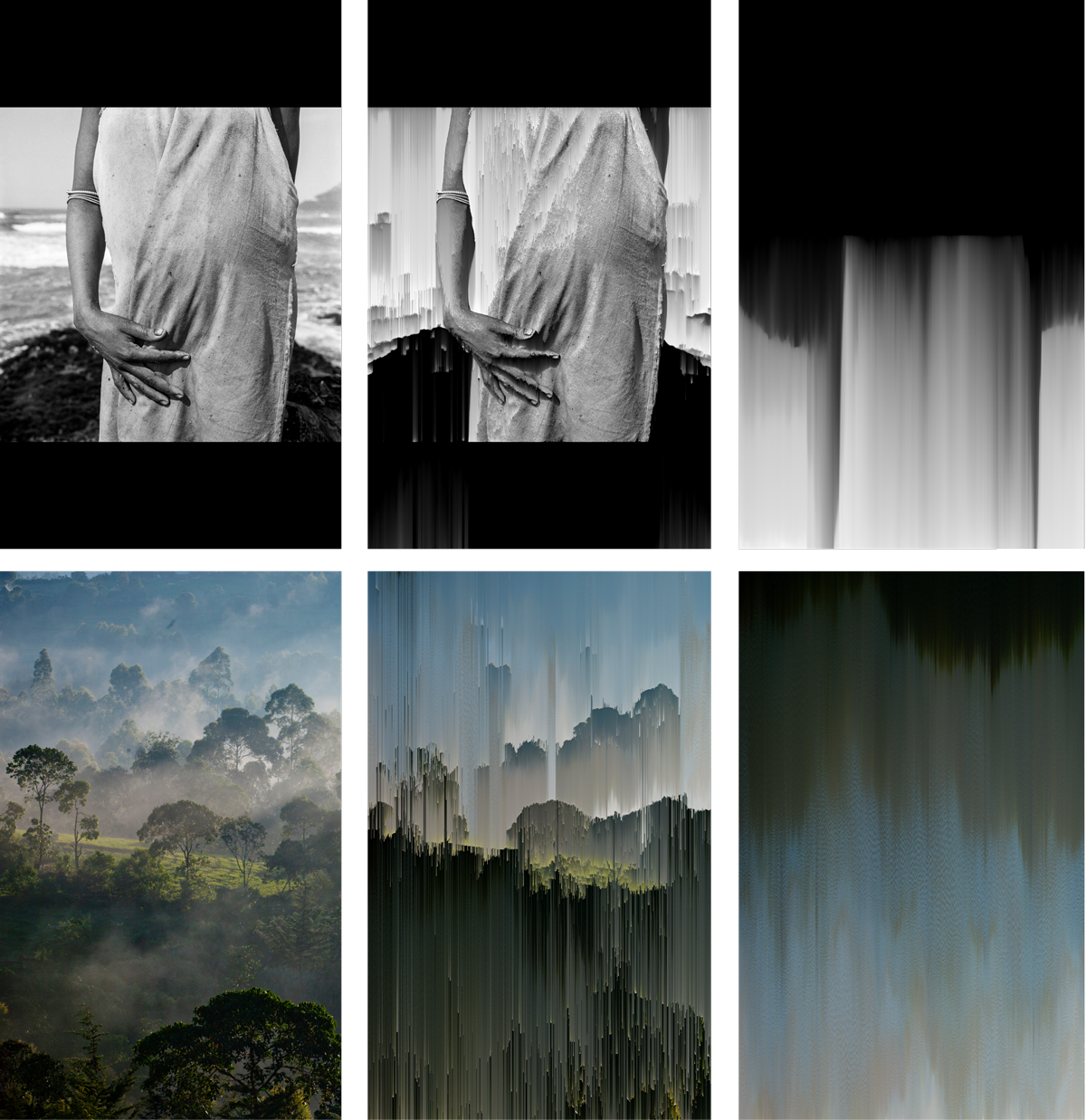

The openFrameworks application displayed the photo and used a pixel sorting algorithm to sort, and so destroy, the image. With each blink, one column of the 4K image is sorted. Once the algorithm reaches the end of the image, it loops back to the start. Pixel sorting works by arranging the pixels in an image from highest to lowest value. Sorting stops when a pixel falls below a certain value and starts again when another value is reached. The result is an interesting “glitch” effect on the photos. Each image had different thresholds for the pixel sorting algorithm, as each photo had different brightness values and a different proportion of black and white pixels. Each photo was given starting values for the algorithm and an end value to be reached at 27,000 blinks. The algorithm worked its way towards the end value over the course of the exhibition.
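
The column sorting described above can be illustrated with a small sketch, written in Python for brevity rather than the C++ of openFrameworks. It treats a column as a list of brightness values and makes several assumptions that are not confirmed by the project: the names `sort_column`, `threshold_at` and `column_for_blink` are hypothetical, the start and stop conditions are modelled as two separate thresholds, and the move from the starting value to the end value is assumed to be linear.

```python
def sort_column(column, start_at, stop_below):
    """Sort runs of one pixel column from highest to lowest brightness.

    A run begins at a pixel >= start_at and ends just before the next
    pixel < stop_below; pixels outside runs are left untouched.
    """
    out = list(column)
    run_start = None
    for i, value in enumerate(column):
        if run_start is None:
            if value >= start_at:
                run_start = i                      # begin a new run here
        elif value < stop_below:
            out[run_start:i] = sorted(out[run_start:i], reverse=True)
            run_start = None                       # wait for the next bright pixel
    if run_start is not None:                      # a run reached the end of the column
        out[run_start:] = sorted(out[run_start:], reverse=True)
    return out


def threshold_at(blinks, start_value, end_value, total_blinks=27_000):
    """Linearly move a threshold from start_value to end_value (assumed linear)."""
    progress = min(blinks, total_blinks) / total_blinks
    return start_value + (end_value - start_value) * progress


def column_for_blink(blink_count, image_width):
    """Each blink advances one column, wrapping back to the start of the image."""
    return blink_count % image_width
```

For example, `sort_column([10, 200, 150, 220, 30, 180, 90, 210], start_at=100, stop_below=50)` leaves the dark pixels `10` and `30` in place and sorts the two bright runs on either side of the `30`, which is what produces the streaked "glitch" look when repeated across many columns.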